I thought it was bad enough when Tim Burton and a poorly configured WP-Super Cache teamed up to kill our sites last month, but I never thought that Google would be responsible for killing 2 of my sites in 1 day.

Yesterday two of our sites simultaneously started throwing 500 errors every time we tried to access them. Last month we had a similar problem, but it happened across 3 of our sites due to a poor cache configuration while Daily Shite was having a massive traffic spike. That issue was resolved and steps were taken to ensure it didn’t happen again. I knew we weren’t having any unusually high traffic, so my first thought (after checking the cache was working properly) was that the MySQL server was on the fritz.

How wrong I was.

After some work and some back and forth with the ever-helpful Dreamhost, it was determined that the culprit was an incredibly industrious Googlebot (66.249.67.198), which had taken it upon itself to go ape and crawl our sites with the zeal of an axe-murdering maniac at a naive virgin convention.
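
If you want to check your own access log for the same pattern, a rough sketch like the one below tallies requests per client IP. It assumes the IP is the first whitespace-separated field on each line, as in the common Apache log formats. The `top_clients` helper and the sample lines are made up for illustration; only the Googlebot address comes from our incident.

```python
from collections import Counter


def top_clients(log_lines, n=5):
    """Return the n busiest client IPs as (ip, request_count) pairs.

    Assumption: the client IP is the first field on every log line.
    """
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if fields:  # skip blank lines
            counts[fields[0]] += 1
    return counts.most_common(n)


# Made-up sample lines for illustration, not real log data.
sample = [
    '66.249.67.198 - - [01/Jan/2000:00:00:01 +0000] "GET /a HTTP/1.1" 200 512',
    '66.249.67.198 - - [01/Jan/2000:00:00:01 +0000] "GET /b HTTP/1.1" 200 512',
    '192.0.2.10 - - [01/Jan/2000:00:00:02 +0000] "GET / HTTP/1.1" 200 1024',
    '66.249.67.198 - - [01/Jan/2000:00:00:02 +0000] "GET /c HTTP/1.1" 500 0',
]

for ip, hits in top_clients(sample):
    print(ip, hits)
```

On a real server you would pass an open log file instead of `sample`; where that file lives depends on your host.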

If this is happening to your site there are ways and means to stop Google from hammering your site into oblivion.

The first and easiest is to disallow all bots from crawling your site by adding the following to your robots.txt file, which goes in the root of your site:

    User-agent: *
    Disallow: /

That will work, but the problem is that over time it will also keep your pages from turning up in the search engines, as they will no longer be crawled and indexed.

What you really want to do is slow down the crawling to reduce the load by placing something similar to this in the robots.txt (lots more information on robots.txt here):

    User-agent: *
    Crawl-Delay: 3600
    Disallow:
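
Before uploading a file like this, it can be worth checking that a standard parser reads it the way you intend. The sketch below uses Python's built-in `urllib.robotparser`; the `check_robots` helper, the `ExampleBot` name and the example URL are placeholders of mine. It only shows how a well-behaved parser interprets the rules, not how any particular crawler will actually behave.

```python
from urllib.robotparser import RobotFileParser

# The throttling rules from above, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 3600
Disallow:
"""


def check_robots(text, agent="ExampleBot", url="http://example.com/some-page"):
    """Return (allowed, crawl_delay) for a hypothetical bot and URL."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser.can_fetch(agent, url), parser.crawl_delay(agent)


allowed, delay = check_robots(ROBOTS_TXT)
print(allowed, delay)  # True 3600

# The "disallow everything" file blocks the same URL.
blocked, _ = check_robots("User-agent: *\nDisallow: /\n")
print(blocked)  # False
```

The delay is read as a number of seconds, so 3600 asks for one request per hour.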

The “Crawl-Delay” command will slow down most good bots, such as the crawler used by Bing, but Google ignores the command and so will continue to hammer your site regardless. That is why it is imperative that you sign your site up for Google Webmaster Tools today (it’s 100% free).

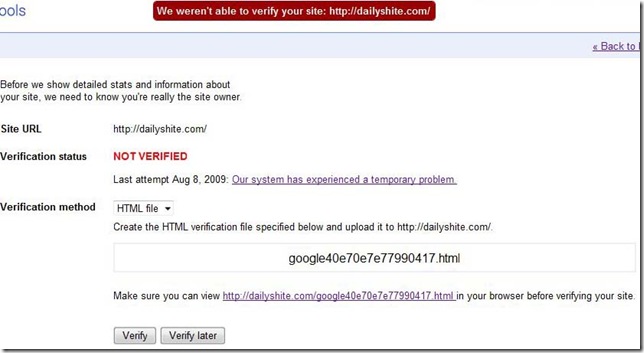

Once you’ve signed up with Google Webmaster Tools, you verify your site either by adding a meta tag to the header of your site’s pages or by uploading a specific HTML file to your server, which Google then checks to verify your ownership of the site.

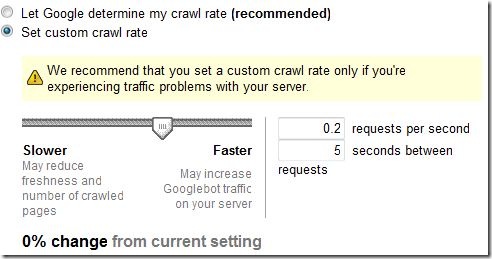

Once your site is verified you can then go in and lower the crawl rate of your site to reduce the load, get your site back up and give yourself some working room.

The reason why you need to sign up now, besides being able to register a sitemap to improve crawling and SEO, is that if your site is being hammered and your server resources are stretched to the limit, then Google won’t be able to verify the site as you’ll probably be serving cached files without the meta tag in place or your site will crash trying to generate a new page with the tag in. Either way – not good!
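
If you do go the meta tag route, you can check whether the page your server is actually handing out contains the tag, which is handy when a cache is involved. This is only a sketch: it assumes the tag is named `google-site-verification`, the `find_meta` helper and the `EXAMPLE-TOKEN` value are made up, and it inspects an HTML string rather than fetching your live page.

```python
from html.parser import HTMLParser


class MetaTagFinder(HTMLParser):
    """Remember the content of the first <meta> tag with a given name."""

    def __init__(self, name):
        super().__init__()
        self.name = name.lower()
        self.content = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.content is not None:
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == self.name:
            self.content = attr.get("content")


def find_meta(html, name="google-site-verification"):
    """Return the tag's content value, or None if the tag is missing."""
    finder = MetaTagFinder(name)
    finder.feed(html)
    return finder.content


# Hypothetical pages: a stale cached copy and a freshly generated one.
cached = "<html><head><title>Old copy</title></head><body></body></html>"
fresh = ('<html><head><meta name="google-site-verification" '
         'content="EXAMPLE-TOKEN" /></head><body></body></html>')

print(find_meta(cached))  # None
print(find_meta(fresh))   # EXAMPLE-TOKEN
```

A `None` for the page your visitors actually get means the cache is still serving a copy from before you added the tag.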

No matter what kind of load your site is under, the HTML file verification should work, but it didn’t for us yesterday when we attempted to verify Daily Shite (PaulOFlaherty.com was already verified), as Google kept throwing an error.

If we’d had all of our sites registered with Google Webmaster Tools, we would have been able to reduce the crawl rate across the board straight away, stopped that Googlebot in its rampant tracks and had considerably less downtime.